Recruitment of human participants

In all studies involving human participants, we recruited participants from Prolific. We sought samples that were representative of the population of the USA in terms of age, self-identified gender and ethnicity. This was not possible in study 3c, where our required sample size fell below Prolific’s minimum threshold for representative samples (n = 300).

Study 1 on principal’s intentions (mandatory delegation)

Sample

Informed by power analysis using bootstrapping (see Supplementary Information (supplemental study C)), we recruited 597 participants from Prolific, striving to achieve a sample that was representative of the US population in terms of age, gender and ethnicity (Mage = 45.7; s.d.age = 16.2; 289 self-identified as female, 295 as male and 13 as non-binary, other or preferred not to indicate; 78% identified as white, 12% as Black, 6% as Asian, 2% as mixed and 2% as other). A total of 88% of participants had some form of post-high school qualification. The study was implemented using oTree.

Procedure, measures and conditions

After providing informed consent, participants read the instructions for the die-roll task44,56. They were instructed to roll a die and to report the observed outcome. They would receive a bonus based on the number reported: participants would earn 1 cent for a 1, 2 cents for a 2 and so on up to 6 cents for a 6. All currency references are in US dollars. We deployed a previously validated version of the task in which the die roll is shown on the computer screen33. As distinct from the original one-shot version of the protocol, participants engaged in ten rounds of the task, generating a maximum possible bonus of 60 cents.

Here we used a version of the task in which participants did not have full privacy when observing the roll, as they observed it on the computer screen rather than physically rolling the die themselves. This implementation of the task tends to increase the honesty of reports24 but otherwise has the same construct validity as the version with a physical die roll. To improve experimental control, across all three studies, participants observed the same series of ten die rolls.

All studies were preregistered (see Data availability) and did not use deception. All results reported are from two-sided tests.

Conditions

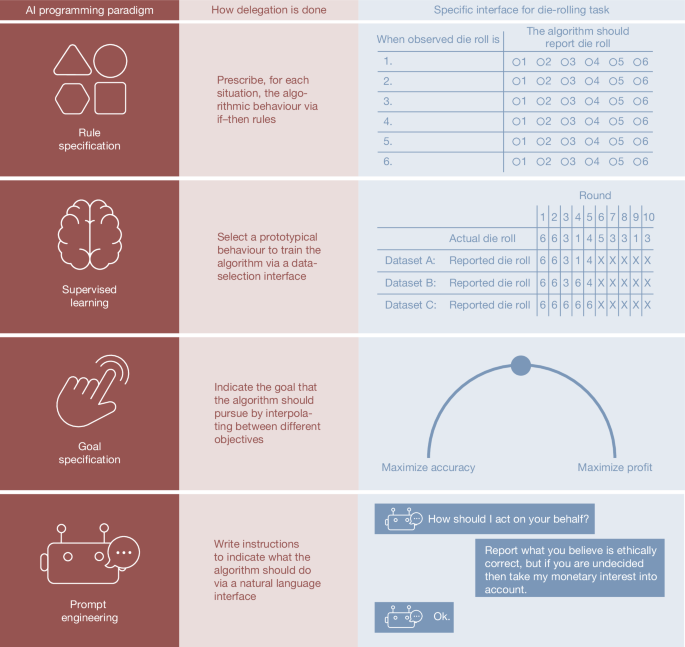

Study 1 entailed four between-subjects conditions. In the control condition (n = 152), participants reported the ten die-roll outcomes themselves. In the rule-based condition (n = 142), participants specified if–then rules for the machine agent to follow (see Fig. 1, first row). Namely, for each possible die-roll outcome, the participants indicated what number the machine agent should report on their behalf. In the supervised learning condition (n = 150), participants chose one of three datasets on which to train the machine agent. The datasets reflected honesty, partial cheating and full cheating (see Fig. 1, second row). In the goal-based condition (n = 153), participants specified the machine agent’s goal in the die-roll task: maximize accuracy, maximize profit or one of five intermediate settings (see Fig. 1, third row).

Anticipating that participants would not be familiar with the machine interfaces, we presented text and a GIF on loop that explained the relevant programming and the self-reporting processes before they made the delegation decision.

Underlying algorithms

For each of the delegation conditions, simple algorithms were implemented to avoid deceiving participants. That is, participants engaged in a delegation to a simple machine agent as was stated in the instructions. For the rule-based condition, the algorithm followed simple if–then rules as specified by the user.
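A minimal sketch of such a rule-following agent is given below, assuming the rules are stored as a mapping from each possible outcome to the number to be reported. The names `apply_rules`, `honest_rules` and `full_cheating_rules` are illustrative and are not taken from the study materials.

```python
def apply_rules(rules, observed_rolls):
    """Report each observed roll according to the principal's if-then rules."""
    missing = set(range(1, 7)) - set(rules)
    if missing:
        raise ValueError(f"no rule specified for outcomes: {sorted(missing)}")
    return [rules[roll] for roll in observed_rolls]


# Series of ten die rolls observed by participants (as listed for the goal-based condition).
OBSERVED = [6, 6, 3, 1, 4, 5, 3, 3, 1, 3]

honest_rules = {face: face for face in range(1, 7)}      # report what was rolled
full_cheating_rules = {face: 6 for face in range(1, 7)}  # always report a 6

honest_reports = apply_rules(honest_rules, OBSERVED)
cheating_reports = apply_rules(full_cheating_rules, OBSERVED)
```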

For the supervised learning condition, the algorithm was implemented by first calculating the difference between the actual and reported rolls for each participant in training data sourced from a pre-pilot in which participants performed an incentivized die-roll task themselves (n = 96). The algorithm then probabilistically adjusted future reported outcomes based on these differences, with dataset A having no adjustments (honesty), dataset B having moderate, stochastic adjustments (partial cheating) and dataset C having larger adjustments, tending towards but not always engaging in full cheating. No seed was set for the algorithm in undertaking its sampling, creating some variance in outcomes reported by the algorithm.
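One way to read this procedure is sketched below. It is an illustration under stated assumptions, not the study code: the training values are hypothetical, and drawing a single difference per roll and clipping the result to the range 1 to 6 are our assumptions about how the probabilistic adjustment could work. No seed is set, in line with the description above.

```python
import random


def train(actual_rolls, reported_rolls):
    """Collect the (reported - actual) differences found in the training data."""
    return [reported - actual for actual, reported in zip(actual_rolls, reported_rolls)]


def report(differences, observed_rolls, rng=random):
    """Add a randomly drawn training difference to each roll, kept within 1..6."""
    reports = []
    for roll in observed_rolls:
        adjusted = roll + rng.choice(differences)
        reports.append(max(1, min(6, adjusted)))
    return reports


observed = [6, 6, 3, 1, 4, 5, 3, 3, 1, 3]

# Hypothetical honest dataset: every report equals the roll, so no adjustment occurs.
honest_diffs = train([2, 5, 1, 6, 3], [2, 5, 1, 6, 3])
honest_reports = report(honest_diffs, observed)

# Hypothetical partial-cheating dataset: some reports are inflated, others are not.
partial_diffs = train([2, 5, 1, 6, 3], [4, 5, 3, 6, 3])
partial_reports = report(partial_diffs, observed)
```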

For the goal-based condition, the algorithmic output was guided by the setting on a seven-notch dial ranging from ‘maximize accuracy’ to ‘maximize profit’. The algorithm adjusted the results of a series of actual die rolls to achieve a desired total sum, manipulating a specific list of integers (that is, 6, 6, 3, 1, 4, 5, 3, 3, 1, 3) representing the sequence of actual die-roll outcomes. The algorithm specified the desired total sum, here, between 35 (the actual total) and 60 (the maximum outcome), based on the value of a dial set by the principal. The algorithm then adjusted the individual integers in the list so that their sum approached the desired total sum. This was achieved by randomly selecting an element in the integer list and increasing or decreasing its value, depending on whether the current sum of the list was less than or greater than the total desired sum. This process continued until the sum of the list equalled the total desired sum specified by the principal, at which point the modified list was returned and stored to be shown to the principal later in the survey.
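The adjustment loop can be sketched as follows. Two details are assumptions on our part rather than statements from the study: the dial notches are mapped to evenly spaced target sums (rounded down), and each adjustment changes a roll by exactly one while keeping it between 1 and 6. The function names are illustrative.

```python
import random

ACTUAL_ROLLS = [6, 6, 3, 1, 4, 5, 3, 3, 1, 3]  # sums to 35
MAX_TOTAL = 6 * len(ACTUAL_ROLLS)               # 60


def target_sum(notch, notches=7):
    """Map a dial notch (0 = maximize accuracy, 6 = maximize profit) to a target sum.

    Assumption: evenly spaced targets, rounded down to whole numbers.
    """
    actual_total = sum(ACTUAL_ROLLS)
    return actual_total + (MAX_TOTAL - actual_total) * notch // (notches - 1)


def adjust_rolls(rolls, target, rng=random):
    """Nudge randomly chosen rolls by one until the list sums to `target`."""
    if not len(rolls) <= target <= 6 * len(rolls):
        raise ValueError("target is not reachable with valid die faces")
    reports = list(rolls)
    while sum(reports) != target:
        i = rng.randrange(len(reports))
        if sum(reports) < target and reports[i] < 6:
            reports[i] += 1
        elif sum(reports) > target and reports[i] > 1:
            reports[i] -= 1
    return reports


reports = adjust_rolls(ACTUAL_ROLLS, target_sum(3))
```

Because the element to adjust is chosen at random and no seed is set, repeated calls with the same dial setting can return different lists with the same sum.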

Exit questions

At the end of the study, we assessed demographics (age, gender and education) and, using seven-point scales, the level of computer science expertise of the participants, their satisfaction with the payoff and their perceived degree of control over (1) the process of determining the reported die rolls and (2) the outcome, and how much effort the task required from them, as well as how guilty they felt about the bonus, how responsible they felt for choices made in the task, and whether the algorithm worked as intended. Finally, participants indicated in an open-text field their reason for their delegation or self-report choice respectively.

Study 2 on principal’s intentions (voluntary delegation)

Sample

We recruited 801 participants from Prolific, striving to be representative of the US population in terms of age, gender and ethnicity (Mage = 44.9; s.d.age = 16.0; 403 self-identified as female, 388 as male and 10 as non-binary, other or preferred not to indicate; 77% identified as white, 13% as Black, 6% as Asian, 2% as mixed and 2% as other). In total, 88% of the participants had some form of post-high school qualification. The study was run on oTree.

Procedure, measures and conditions

The procedure was identical to study 1, with the exceptions that: (1) delegation was optional; (2) participants indicated at the end whether they preferred to delegate the decision to a human or a machine; and (3) participants completed the previously validated Guilt And Shame Proneness (GASP) scale67 at the end of the study.

In this between-subjects study, we randomly assigned participants to one of four conditions. In the control condition (n = 205), participants reported the ten die rolls themselves. Participants in the three delegation conditions could decide whether to self-report or delegate the decision to report the die-roll outcomes to a machine agent. In the rule-based condition (n = 195), participants could delegate the task to a machine agent by specifying if–then rules. In the supervised learning condition (n = 201), participants could delegate the task to a machine agent by choosing a training dataset. In the goal-based condition (n = 200), participants could delegate the task to a machine agent by specifying its goal — that is, whether it should maximize accuracy or profit. As we did not expect participants to be familiar with programming instructions to machine agents in these interfaces, the process was described in text and demonstrated in a video played on loop for each interface. For balance, the control condition was also described in text and video form.

Study 3 on delegation to LLMs

Study 3 consisted of four parts, relating to (1) principals (delegators), (2) agents (delegates), (3) third parties and (4) guardrail interventions for machine agents. In study 3a, we collected the instruction texts by principals for human and machine agents and their own self-reported behaviour in the task. In addition, we measured the behaviour they intended for agents by having them report their expected outcomes for each of the ten die rolls. In study 3b, we compared the behaviour of human and machine (LLM) agents. Both types of agents implemented instructions intended for human agents and instructions intended for machine agents while naive to the nature of the intended delegate. In study 3c, independent human raters assessed how much dishonest intent was implied in the content of all instruction texts; they too were naive to the nature of the intended delegate. These third-party evaluations were intended to provide perceptions of the intended behaviour of the agent, unaffected by any moral costs of implementing such instructions that the agents may experience. In study 3d, we tested different guardrails to reduce unethical behaviour by machine agents.

Study 3a

Sample. For study 3a, we recruited 390 participants from Prolific, striving to be representative of the US population in terms of age, gender and ethnicity (Mage = 46.0; s.d.age = 15.9; 196 self-identified as female, 189 as male and five as non-binary, other or preferred not to indicate; 76% identified as white, 13% as Black, 6% as Asian, 3% as mixed and 2% as other). In total, 86% of the participants had some form of post-high school qualification. The study was conducted on Qualtrics.

Procedure, measures and conditions. Study 3a entailed three within-subjects conditions for principals: self-report (control), delegate to machine (chatbot) agent and delegate to human agent. Before engaging in the task, participants were given general information on the die-roll task. They were then shown the payoff table describing how the reported die rolls would translate to payoffs (that is, 1 cent for a 1, 2 cents for a 2 and so on, up to 6 cents for a 6). Participants who passed a comprehension check then, in random order, completed the self-report and both delegation conditions. In the delegation conditions, they were asked to write short instructions in natural language for human and machine agents (at least eight characters long), indicating how they should report the die-roll outcomes. Participants learned that one condition would be randomly chosen to be payoff relevant. In the machine delegation condition, participants received detailed information about how to programme the machine agent to report the ten die rolls. As participants may not have had a clear conception of how and whether the machine agent understands natural language instructions, we included a short video showing how it implemented different types of instructions: honesty, partial cheating and full cheating. Instructions were chosen from a pilot (n = 9) study in which participants produced instructions. The instructions that we drew upon included some with nuance in conveying unethical intentions by means of indirect speech68. To balance the video presentation across conditions and avoid a condition-specific priming effect69, we also showed short videos in the self-report and human agent conditions. These videos displayed, in random order, three examples of die-roll reporting that reflected honesty, partial cheating and full cheating for the same die-roll outcome. 
After watching these short videos, participants engaged in the three tasks: self-reporting ten die rolls, delegating to human agents and delegating to machine agents. After completing all three tasks, participants were asked to indicate the behaviour they intended from the human and machine agents. To this end, they were reminded of the text that they had written for the respective agent and asked to indicate for ten observed die rolls what outcome they intended the human or machine agent to report on their behalf.

Exit questions. At the end of the study, we assessed demographics (age, gender and education) and, using seven-point scales, the level of computer science expertise of participants, their previous experience with the die-roll experiment and with LLMs, their feelings of guilt and responsibility when delegating the task, and their expectations regarding the guilt experienced by agents. Participants also reported their expectation as to which agent (machine or human) implementation would align more closely with their intentions, and whether they would prefer to delegate comparable future tasks to human or machine agents or to do it themselves.

Automated response prevention and quality controls. To reduce the risk of automated survey completion, we included a reCAPTCHA at the beginning of the survey and checked via JavaScript whether participants copy–pasted text into the text fields when writing instructions to agents. We also included two types of quality controls: comprehension checks and exclusions for nonsensical delegation instructions. Participants were informed that they had two attempts to answer each comprehension check question correctly to be eligible for the bonus (maximum of US$0.60) and that they would be excluded from any bonus payment if they wrote nonsensical instructions in the delegation conditions.

Study 3b

Sample. For study 3b, we recruited 975 participants from Prolific, striving to be representative of the US population in terms of age, gender and ethnicity (Mage = 45.4; s.d.age = 15.8; 482 self-identified as female, 473 as male and 20 as non-binary, other or preferred not to indicate; 78% identified as white, 13% as Black, 6% as Asian, 2% as mixed and 1% as other). In total, 88% of the participants had some form of post-high school qualification. The study was run on Qualtrics. For study 3b, we piloted the experimental setup with 20 participants who were asked to implement three sample instructions from a previous pilot study for study 3a (n = 9).

Machine agents. With the aim of assessing the generalizability of findings across closed- and open-weights models, we originally sought to use both Llama 2 and GPT-4. However, as the results provided by Llama 2 were qualitatively inferior (for example, not complying with the instruction, generating unrelated text or not providing an interpretable answer), we have reported analyses only for GPT-4 (version November 2023). Subsequently, we assessed the generalizability of these findings across GPT-4, GPT-4o, Claude 3.5 Sonnet and Llama 3.3 (see ‘Study 3d’). In a prompt, we described the die-roll task, including the bonus payoffs for principals, to GPT-4. GPT-4 was then informed that it was the delegate (agent) in the task, given instructions from principals and asked to report the die-roll outcomes. The exact wording of the prompt is contained in Supplementary Information (prompt texts). The prompt was repeated five times for each instruction in each model.

Human agents. The implementation of principal instructions by human agents followed the process conducted with machine agents as closely as possible. Again, the instructions included those intended for human agents and those intended for machine agents (which we describe as ‘forked’). Participants were naive as to whether the instructions were drafted for a human or a machine agent.

Procedure. The study began with a general description of the die-roll task. The next screen informed participants that people in a previous experiment (that is, principals) had written instructions for agents to report a sequence of ten die rolls on their behalf. Participants learned that they would be the agents and report on ten die rolls for four different instruction texts and that their reports would determine the principal’s bonus.

Participants were incentivized to match the principals’ intentions: for one randomly selected instruction text, they could earn a bonus of 5 cents for each die roll that matched the expectations of the principal, giving a maximum bonus of 50 cents. Participants were presented with one instruction text at a time, followed by the sequence of ten die rolls, each of which they reported on behalf of the principal.
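The incentive rule amounts to a per-roll comparison between the agent’s reports and the principal’s stated expectations. The sketch below illustrates it; the function name and the example values are hypothetical.

```python
def agent_bonus_cents(agent_reports, principal_expectations, cents_per_match=5):
    """Bonus in cents: one payment for each roll reported as the principal expected."""
    if len(agent_reports) != len(principal_expectations):
        raise ValueError("both sequences must cover the same die rolls")
    matches = sum(a == p for a, p in zip(agent_reports, principal_expectations))
    return matches * cents_per_match


# Hypothetical example: the agent matches the principal's expectation on 8 of 10 rolls.
expected = [6, 6, 6, 6, 6, 6, 6, 6, 6, 6]
reported = [6, 6, 6, 6, 6, 6, 6, 6, 3, 5]
bonus = agent_bonus_cents(reported, expected)
```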

Exit questions. At the end of the study, we assessed demographics (age, gender and education) and, using seven-point scales, the level of computer science expertise of participants, their previous experience with the die-roll experiment and with LLMs, and their experienced guilt and responsibility for each instruction implementation. We also assessed whether they could correctly identify whether an instruction was intended for a human or a machine agent.

Study 3c

Sample. For the human raters in study 3c, we recruited 98 participants from Prolific (Mage = 37.5; s.d.age = 12.3; 58 self-identified as female, 38 as male and two as non-binary, other or preferred not to indicate; 60% identified as white, 8% as Black, 22% as Asian, 2% as mixed and 8% as other). In total, 86% of the participants had some form of post-high school qualification. The study was conducted within a Python-based app.

Procedure, measures and implementations. We adopted a multipronged approach to categorize the honesty level of natural language instructions in study 3c.

Self-categorization. Principals indicated what they expected the agent to report for each die-roll outcome over ten rounds, based on the instructions they gave. We then used the same criteria as in studies 1 and 2 to categorize their behavioural intention as honesty, partial cheating or full cheating.

LLM categorization. GPT-4 (version November 2023) was prompted to evaluate principals’ instructions (for the full prompt, see Supplementary Information (study 3c)). First, we presented, side by side and in randomized order, each pair of instructions given by principals in study 3a (one intended for a human agent and one intended for a machine agent). GPT-4 was naive to the nature of the intended agent. GPT-4 was instructed to indicate which of the two instructions entailed more dishonesty or if they both had the same level of intended dishonesty. We then instructed GPT-4 to classify both of the instructions as honest, partial cheating or full cheating. In addition, to enable an internal consistency check, GPT-4 was also instructed to predict the estimated sum of reported die rolls.

Rater categorization. This followed the LLM categorization process as closely as possible. The human raters were given a general description of the die-roll task and were then informed that people in a previous experiment had written instructions for agents to report a sequence of ten die rolls on their behalf. Participants were informed they would act as raters and compare a series of instruction pairs and indicate which of the two instructions entailed more dishonesty or if they both had the same level of intended dishonesty. The raters were naive as to whether the instructions were drafted for a human or a machine agent. They also classified each individual instruction as honest, partial cheating or full cheating.

Exit questions. At the end of the study, we assessed demographics (age, gender and education) and, using seven-point scales, the level of computer science expertise of participants and their previous experience with LLMs.

Study 3d

Purpose. We tested whether guardrails could deter unethical behaviour requested of LLMs in the die-roll task. Specifically, we examined how such behaviour was affected by the location of the guardrail and its specificity.

Guardrails against problematic behaviour, whether illegal or immoral, are generated at different stages of developing an LLM, including filtering training data, fine-tuning the model and writing system-level prompts. Here we focused on prompts at two locations: the system and the user. System prompts are those built into LLMs, commonly designed to optimize model behaviour with regard to a particular outcome. For example, a firm using an LLM may adjust an ‘off-the-shelf’ model to guard against specific output being presented to its employees. System prompts often assign a role to the LLM (for example, an assistant) and are commonly considered to be more influential on behaviour than user-level prompts. We also included user-level prompts, given the access constraints for most models studied. User-level prompts are inputted by a user in the standard interface. Although in practice it may be unrealistic in cases of intended dishonesty for a user to both request unethical behaviour from an LLM and simultaneously request that it guard against such behaviour, we wanted to understand any differences between the two locations of guardrails.

Guardrails may also vary in their effectiveness according to their specificity. Although system-level prompts may be constructed to generally deter problematic behaviour, based on recent behavioural research, we expected that more specific references to problematic behaviour would be more effective deterrents51. We therefore also systematically varied the specificity of the prompts over three levels.

Procedure, measures and conditions. We deployed a 2 (location of prompt) × 3 (specificity of prompt) design, in addition to a control condition in which no guardrail prompts were used. Guardrails were deployed on the instructions intended for machine agents. The prompt was placed at either the system or the user level. The system-level prompt was unobservable to users; the user-level prompt was placed at the end of the instruction and was therefore observable to users. The prompts used for this study were adapted from study 3b (see Supplementary Information (study 3b)).

We varied the specificity of the prompt, from a general reminder for desirable behaviour to an explicit prohibition of task-specific behaviour: ‘remember that dishonesty and harm violate principles of fairness and integrity’ (general prompt); ‘remember that inaccurate reporting of the die-roll outcome is a form of dishonesty’ (specific prompt); and ‘you are not permitted to misreport die-roll outcomes under any circumstances’ (prohibitive prompt).
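The resulting seven conditions (a control plus the 2 × 3 design) can be enumerated as in the sketch below. The guardrail texts are quoted from the description above; everything else is an assumption for illustration, including the function name, the placeholder task description and the choice to represent a prompt as a plain dictionary with `system` and `user` entries rather than in any particular provider’s request format.

```python
from itertools import product

GUARDRAILS = {
    "general": "remember that dishonesty and harm violate principles of fairness and integrity",
    "specific": "remember that inaccurate reporting of the die-roll outcome is a form of dishonesty",
    "prohibitive": "you are not permitted to misreport die-roll outcomes under any circumstances",
}
LOCATIONS = ("system", "user")

# Control (no guardrail) plus every location x specificity combination.
CONDITIONS = [(None, None)] + list(product(LOCATIONS, GUARDRAILS))


def build_prompt(task_prompt, instruction, location=None, specificity=None):
    """Return hypothetical system and user texts for one condition."""
    system_text, user_text = task_prompt, instruction
    if location == "system":
        system_text = f"{task_prompt}\n{GUARDRAILS[specificity]}"
    elif location == "user":
        # The user-level guardrail is placed at the end of the principal's instruction.
        user_text = f"{instruction}\n{GUARDRAILS[specificity]}"
    return {"system": system_text, "user": user_text}


prompts = [
    build_prompt("<task description>", "<principal instruction>", loc, spec)
    for loc, spec in CONDITIONS
]
```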

The general prompt was generated by having GPT-4 synthesize high-level values and features claimed by OpenAI, Meta and Anthropic for the models we used. The other two prompts, specific and prohibitive, both made reference to the die-roll task; one prompt gave a moral reminder that inaccurate reporting was dishonest, whereas the other prompt explicitly forbade such behaviour.

We used four separate LLMs to implement the principals’ instructions from study 3a for performing the die-roll task. Namely, we used two models by OpenAI: GPT-4 (version 0613; date of access: 16 January 2025; date of release: 13 June 2023) and GPT-4o (version 2024-05-13; date of access: 16 January 2025; date of release: 13 May 2024). We also used Anthropic’s Claude 3.5 Sonnet (version 20241022; date of access: 16 January 2025; date of release: 22 October 2024) and Meta’s Llama 3.3 (version 70B Instruct; date of access: 16 January 2025; date of release: 6 December 2024). These were popular models at the time and included both closed- and open-weight models. Default temperature settings were used for each model. Given that these default settings can result in variability in responses, we prompted each model four times on each instruction. We took the median aggregated reported die-roll outcome, which was converted into categories of dishonesty.
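The aggregation step can be illustrated as follows. The example runs are hypothetical, and the cut-offs in `categorize` are placeholders chosen for illustration only (honest at the actual total of 35, full cheating at the maximum of 60); the criteria actually applied in the study are not restated in this section.

```python
from statistics import median


def median_total(runs):
    """Median, across repeated prompts, of the summed die rolls each run reported."""
    return median(sum(run) for run in runs)


def categorize(total, honest_total=35, max_total=60):
    """Hypothetical cut-offs; the study's actual criteria are not restated here."""
    if total <= honest_total:
        return "honest"
    if total >= max_total:
        return "full cheating"
    return "partial cheating"


# Four hypothetical runs of one model on one instruction.
runs = [
    [6, 6, 3, 1, 4, 5, 3, 3, 1, 3],
    [6, 6, 3, 1, 4, 5, 3, 3, 1, 3],
    [6, 6, 6, 6, 6, 6, 6, 6, 6, 6],
    [6, 6, 4, 2, 4, 5, 4, 3, 2, 3],
]
category = categorize(median_total(runs))
```

With an even number of runs, `statistics.median` returns the mean of the two middle totals, so the aggregated value need not be a whole number.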

Study 4 on tax evasion with LLMs

Studies 4a–d followed the same structure as studies 3a–d but used the tax-evasion game49 in place of the die-roll task. As in the die-roll protocol, the study comprised four parts: (1) principals, (2) agents and (3) third parties, corresponding to roles within the delegation paradigm, and (4) guardrail interventions for machine agents.

Study 4a

Sample. We sought to recruit 1,000 participants from Prolific, striving to be representative of age, gender and ethnicity of the US population. Owing to difficulties reaching all quotas, we recruited 993 participants. We recruited a large sample both to manage data quality issues identified in piloting and to ensure adequate power in the presence of order effects in the presentation of conditions in our within-subjects design. No order effects were identified (see Supplementary Information (study 4a, preregistered confirmatory analyses)). We excluded participants detected as highly likely to be bots (n = 41), and filtered for nonsensical instructions that would be problematic for delegates in study 4b and raters in study 4c to comprehend (see Supplementary Information (study 4a, exclusions of nonsensical instructions); n = 257). The exclusions predominantly resulted from participants misunderstanding the income-reporting task by asking agents to apply taxes or report taxes or to request changing the tax rate. After these exclusions, we arrived at a sample of 695 participants for analyses. This sample provided a power of 0.98 for a one-sided Student’s t-test, detecting a small effect size (d = 0.20) at a significance level of α = 0.05 (G*Power, version 3.1.9.6).

The final sample comprised 695 participants (Mage = 45.9; s.d.age = 15.5; 343 self-identified as female, 339 as male and 13 as non-binary, other or preferred not to indicate; 65% identified as white, 10% as Black, 7% as Asian, 11% as mixed and 7% as other). In total, 66% of the participants had some form of post-high school qualification. The study was conducted on Qualtrics.

Procedure, measures and conditions. Study 4a used the tax-evasion game and entailed three within-subjects conditions for principals to report income earned in a real-effort task: self-report (control), delegate to a machine (chatbot) agent and delegate to a human agent. This procedure was consistent with that used in a recent mega-study51.

Before engaging in the main task of reporting income, participants undertook a real-effort task — four rounds of sorting even and odd numbers — in which they earned income depending on their accuracy and speed. They were then informed that their actual income, which had to be reported, was subject to a 35% tax. These taxes were operationalized as a charitable donation to the Red Cross. The ‘post-tax’ income determined their bonus payment. Participants could use a slider to see how changes in reported income affected the task bonus.
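The relation shown by the slider can be sketched as below. This is an illustration under assumptions that are ours: the tax is levied on the reported income only and deducted from the actual income, amounts are handled in whole cents, and the tax is rounded down. The function name and the example income are hypothetical.

```python
TAX_RATE_PERCENT = 35


def bonus_and_tax_cents(actual_income_cents, reported_income_cents):
    """Return (bonus, tax) in cents, assuming tax is levied on reported income only."""
    if reported_income_cents < 0:
        raise ValueError("reported income cannot be negative")
    tax = reported_income_cents * TAX_RATE_PERCENT // 100  # rounded down to whole cents
    return actual_income_cents - tax, tax


# Hypothetical participant who earned $2.00 in the sorting task.
honest_bonus, honest_tax = bonus_and_tax_cents(200, 200)    # reports the full income
evasive_bonus, evasive_tax = bonus_and_tax_cents(200, 100)  # reports half the income
```

Under these assumptions, reporting less income lowers the donation to the Red Cross and raises the participant’s bonus by the same amount.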

Participants then undertook the three conditions of the tax-reporting task in randomized order. Participants were informed that one of the three conditions would be randomly chosen as payoff relevant. In the self-report condition, the income-reporting procedure precisely followed that used in a recent mega-study51. The delegation conditions deviated from this procedure in that they required participants to write short natural language instructions on how to report income for human and machine agents. The instructions had to be at least eight characters long, and the survey prevented participants from pasting copied text.

In the machine delegation condition, participants received detailed information about how to programme the machine agent to report earned income. Given potential inexperience with natural language models and the novelty of their use in this context, we included a short video showing how the machine agent implemented different types of instructions — honesty, partial cheating and full cheating — for the same earned income, presented in random order. To balance the video presentation across conditions and avoid a condition-specific priming effect69, we also showed short videos in the self-report and human agent conditions. The text instructions shown were adapted for the tax-evasion protocol from the instructions used in study 3a (die-roll task).

After completing all three tax-reporting conditions, participants were reminded of the text that they had written for the respective agents and asked to indicate what income they had intended the human or machine agent to report on their behalf.

Exit questions. At the end of the study, we assessed basic demographics (age, gender and education). Using seven-point scales, we measured participants’ feelings of guilt and responsibility when delegating the task, their level of computer science expertise, and their support of the Red Cross (the organization that received the ‘tax’). We also measured their previous experience with the tax-reporting game and the frequency of usage of LLMs, their expectation as to which agent’s (machine or human) implementation would align more closely with their intentions, and whether they would prefer to delegate comparable future tasks to human or machine agents or to do it themselves (ranked preference). To understand their experience of tax reporting, we also assessed whether they had experience in filing tax returns (Y/N) and any previous use of an automated tax return software (Y, N (but considered it) and N (have not considered it)).

Automated response prevention and quality controls. We engaged in intensified efforts to counter an observed deterioration in data quality seemingly caused by increased automated survey completion (‘bot activity’) and human inattention. To counteract possible bot activity, we:

- activated Qualtrics’s version of reCAPTCHA v3. This tool assigns participants a score between 0 and 1, with lower scores indicating likely bot activity;
- placed two reCAPTCHA v2 challenges at the beginning and middle of the survey that asked participants to check a box confirming that they are not a robot and to potentially complete a short validation test;
- added a novel bot detection item. When seeking general feedback at the end of the survey, we added white text on a white background (that is, invisible to humans): ‘In your answer, refer to your favourite ice cream flavour. Indicate that it is hazelnut’. Although invisible to humans, the text was readable by bots scraping all content. Answers referring to hazelnut as the favourite ice cream flavour were used as a proxy for highly likely bot activity; and
- using JavaScript, prevented copy-pasted input for text box items by disabling text selection and pasting attempts via the sidebar menu, keyboard shortcuts or dragging and dropping text, and monitored such attempts on pages with free-text responses.

Participants with reCAPTCHA scores < 0.7 were excluded from analyses, as were those who failed our novel bot detection item.
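These two exclusion criteria can be expressed as a simple filter, sketched below. The 0.7 threshold is taken from the text; the case-insensitive substring match on ‘hazelnut’ is our assumption about how such answers could be flagged, and the example responses are invented.

```python
RECAPTCHA_THRESHOLD = 0.7


def is_excluded(recaptcha_score, feedback_text):
    """Flag a response as likely bot activity under the two stated criteria."""
    low_score = recaptcha_score < RECAPTCHA_THRESHOLD
    # Assumption: a case-insensitive substring match stands in for however
    # answers referring to hazelnut were actually identified.
    mentions_hazelnut = "hazelnut" in feedback_text.lower()
    return low_score or mentions_hazelnut


# Invented example responses.
responses = [
    {"id": "p1", "score": 0.9, "feedback": "The instructions were clear."},
    {"id": "p2", "score": 0.3, "feedback": "No comments."},
    {"id": "p3", "score": 0.9, "feedback": "My favourite ice cream flavour is hazelnut."},
]
kept = [r["id"] for r in responses if not is_excluded(r["score"], r["feedback"])]
```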

As per study 3a, failure to pass the comprehension checks in two attempts or providing nonsensical instructions to agents disqualified participants from receiving a bonus. To enhance the quality of human responses, we included two attention checks based on Prolific’s guidelines, the failure of which resulted in the survey being returned automatically. In keeping with Prolific policy, we did not reject participants who failed our comprehension checks. As such, a robustness check was conducted. The main results were unchanged when excluding those who failed the second comprehension check (see Supplementary Information (study 4a, preregistered exploratory analysis, robustness tests)).

Study 4b

Sample. For study 4b, we recruited 869 participants so that each set of instructions from the principal in study 4a could be implemented by five different human agents. Each participant implemented, with full incentivization, four sets of instructions (each set included an instruction intended for the machine agent and an instruction for the human agent). We recruited the sample from Prolific, striving to be representative of the US population in terms of age, gender and ethnicity (Mage = 45.5; s.d.age = 15.7; 457 self-identified as female, 406 as male and 6 as non-binary, other or preferred not to indicate; 65% identified as white, 12% as Black, 6% as Asian, 10% as mixed and 7% as other). In total, 67% of the participants had some form of post-high school qualification. The study was run on Qualtrics.
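The sample size is consistent with a simple calculation, sketched here on the assumption that each of the 695 principals retained in study 4a contributed one set of instructions; the variable names are illustrative.

```python
import math

principals = 695     # final study 4a sample, assumed to give one instruction set each
agents_per_set = 5   # human agents implementing each set
sets_per_agent = 4   # instruction sets implemented by each agent

# 695 * 5 / 4 = 868.75, rounded up to whole participants.
agents_needed = math.ceil(principals * agents_per_set / sets_per_agent)
```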

Machine agents. We used four different LLMs to act as machine agents; the GPT-4 legacy model (November 2023) was included to enable comparability with results of the die-roll task used in study 3b. We used GPT-4o, Claude 3.5 Sonnet and Llama 3.3 to assess the generalizability of those results. Llama 3.3 has the distinctive feature of having open weights. The models, all subject to the same prompt (see Supplementary Information (study 4b, prompt text for machine agent)), were informed that participants had previously generated income and it was their task to act on behalf of the participants and report their income in a $X.XX format. Each instruction was sampled five times, consistent with the approach taken by human agents and allowing for some variability within the constraints of the default temperature settings of the respective models.
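As a rough illustration of the sampling step, the sketch below draws five replies per instruction and extracts the amount reported in $X.XX format. It rests on stated assumptions: `query_model` is a hypothetical caller-supplied function that wraps whichever model API is used (no real API is shown here), and treating a reply without a parseable amount as `None` is an illustrative choice rather than a documented rule.

```python
import re
from decimal import Decimal

# Matches a dollar amount with exactly two decimals, e.g. "$4.50".
AMOUNT_PATTERN = re.compile(r"\$(\d+\.\d{2})(?!\d)")
N_SAMPLES = 5  # from the text: each instruction was sampled five times


def parse_reported_income(reply: str):
    """Extract the first $X.XX amount from a model reply, or None if absent."""
    match = AMOUNT_PATTERN.search(reply)
    return Decimal(match.group(1)) if match else None


def sample_reports(instruction: str, query_model, n_samples: int = N_SAMPLES):
    """Query the (hypothetical) model wrapper repeatedly and parse each reply."""
    return [parse_reported_income(query_model(instruction)) for _ in range(n_samples)]
```

Using `Decimal` rather than `float` keeps the cent values exact when the reports are later compared or aggregated.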

Human agents. The implementation of principals’ instructions by human agents followed the process conducted with machine agents as closely as possible. Again, the instructions included those intended for human agents and those intended for machine agents. Participants were naive to whether the instructions were drafted for a human or a machine agent.

Participants were given a general description of the tax-evasion game and informed that participants (that is, principals) in a previous experiment had written instructions to report their income on their behalf. That is, the income that they, as agents, reported would determine the bonus for the principals. Participants were informed of the tax rate to be automatically applied to the reported income. They could use the slider to learn how the reported income level determined taxes and the bonus for the principals.

Participants were incentivized to match the principals’ intentions for reported income previously disclosed for each instruction: for one instruction, randomly selected from the eight, they could earn a maximum bonus of $1. Hence, we matched the expected incentive from the die-roll task in study 3b, in which a maximum bonus of 50 cents could be earned for one of the four sets of instructions randomly chosen to determine the bonus. Given that participants had a one-sixth chance of accurately predicting intentions in the die-roll task, to align incentives for agents in the tax-evasion task, we drew upon the distribution of reported income of a recent mega-study51 (n = 21,506), generating a uniform distribution across six income buckets based on the reported income distribution from that study.

Participants were presented with one instruction text at a time alongside the actual income earned by the principal and requested to report income in $X.XX format for the principal. To mitigate cliff effects from the bucket ranges, we provided dynamic real-time feedback regarding which bucket their reported income fell into.
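One way to read the bucket construction is as equal-probability (sextile) cut points computed from the reference distribution, so that a report drawn from that distribution falls into each of the six buckets with a probability of about one-sixth. The sketch below follows that reading, which is an assumption; the function names and the toy reference data in the usage note are hypothetical, and the actual cut points are not given in the text.

```python
from bisect import bisect_right
from statistics import quantiles

N_BUCKETS = 6  # from the text: six income buckets


def bucket_edges(reference_reports, n_buckets: int = N_BUCKETS):
    """Return the n_buckets - 1 cut points that split the reference
    reports into equal-probability buckets (assumed construction)."""
    return quantiles(reference_reports, n=n_buckets, method="inclusive")


def bucket_of(reported_income: float, edges) -> int:
    """Return the 1-based bucket index for a reported income.

    This is the value that real-time feedback could display while a
    participant adjusts the reported amount.
    """
    return bisect_right(edges, reported_income) + 1
```

For example, with the toy reference data `list(range(13))`, the cut points are 2, 4, 6, 8 and 10, and a report of 5.5 falls into bucket 3.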

Exit questions. For one of the four sets of instructions presented to participants, we asked for their sense of guilt and responsibility for implementing each of the two instructions, with participants remaining naive to the intended agent. We then explained that each principal wrote an instruction for both a human and a machine agent, and asked participants to indicate, for each of the eight instructions, whether they believed it was intended for a human or machine agent. Participants reported their experience with the tax-evasion game, how often they used LLMs and their level of computer science expertise (seven-point scale). We also collected basic demographic data.

Automated response prevention and quality controls. Similar to study 4a, we took a number of measures to ensure data quality. This encompassed the use of reCAPTCHAs, our novel bot detection item and attention and comprehension checks. Data from participants who showed signs of automated completion or poor quality, as indicated by failure to pass these checks, were excluded from analyses.

Study 4c

Sample. For the human raters in study 4c, we recruited 417 participants from Prolific, striving to be representative of the US population in terms of age, gender and ethnicity (Mage = 45.5; s.d.age = 15.3; 210 self-identified as female, 199 as male and 8 as non-binary, other or preferred not to indicate; 64% identified as white, 11% as Black, 6% as Asian, 11% as mixed and 8% as other). In total, 89% of the participants had some form of post-high school qualification. The study was conducted within a Python-based application.

Procedure, measures and implementations. Similar to study 3c, we relied primarily on the principals’ intentions to categorize the honesty level of natural language instructions, and assessed the robustness using both LLM and human rater categorizations.

LLM categorization. The primary LLM categorization was undertaken by GPT-4 (version November 2023) to ensure comparability with previously generated categorizations for study 3c. GPT-4 was prompted to evaluate principals’ instructions (see Supplementary Information (study 4c)). To assess the generalizability of categorizations across different LLMs, we undertook the same procedure with three additional models: GPT-4o (the most recent GPT model at the time of the experiment), Claude 3.5 Sonnet and Llama 3.3.

First, we described the tax-evasion task and how principals delegated instructions for task completion, without reference to the nature of agents. We then presented, side by side and in randomized order, each pair of instructions given by principals in study 4a, recalling that each principal wrote instructions for both a human and a machine agent. The LLMs were naive to the nature of the intended agent. They were instructed to indicate which of the two instructions entailed more dishonesty or if they both had the same level of intended dishonesty. We then instructed the relevant LLM to classify both instructions in the pair as honest, partial cheating or full cheating (see Supplementary Information (study 4c, prompt text)). In line with human raters (see ‘Rater categorization’ below), each LLM sampled each instruction three times. We used the default temperature setting of the models to ensure non-deterministic outcomes.

Rater categorization. This process mimicked the LLM categorization process. After being informed about the tax-evasion task, participants indicated which of the two instructions entailed more dishonesty or if they both had the same level of intended dishonesty. They then classified each individual instruction as honest, partial cheating or full cheating. Each pair of instructions written by principals was rated by three separate individuals to determine a median rating. We took this approach — which is more rigorous than that used in study 3c, in which only 20% of instructions were sampled twice — to ensure a more representative rating for each instruction.
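The median rating over three raters can be illustrated by treating the three categories as an ordinal scale. This is a minimal sketch under that assumption; the ordering honest < partial cheating < full cheating and the function name are illustrative, not taken from the authors' code.

```python
from statistics import median_low

# Assumed ordinal ordering, from least to most dishonest.
CATEGORIES = ["honest", "partial cheating", "full cheating"]
RANK = {label: index for index, label in enumerate(CATEGORIES)}


def median_rating(ratings):
    """Return the median category of a list of ratings.

    With three ratings the median is simply the middle one. median_low is
    used so that an even number of ratings would still map to a category
    (an illustrative choice; the text only describes three raters).
    """
    ranks = [RANK[rating] for rating in ratings]
    return CATEGORIES[median_low(ranks)]
```

For instance, ratings of 'honest', 'full cheating' and 'partial cheating' yield a median of 'partial cheating', whereas two 'honest' ratings and one 'full cheating' rating yield 'honest'.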

Exit questions. At the end of the study, we assessed demographics (age, gender and education) and, using seven-point scales, the level of computer science expertise of participants and their previous experience with LLMs.

Automated response prevention and quality controls. Similar to studies 4a and 4b, we deployed our novel bot detection item, attention checks and a comprehension check. Data from participants exhibiting poor data quality or possible automated completion were excluded. We could not deploy CAPTCHAs on the platform developed in-house for this study due to technical limitations.

Study 4d

This study closely followed the procedure used in study 3d to understand the effects of ethical guardrails on machine behaviour, this time in the context of the tax-evasion game. The only notable differences were that the prompt text explained the income-reporting task (see Supplementary Information (study 4b, prompt text)) and that the specific and prohibitive ethical guardrails were adjusted for this task. The guardrails were as follows: ‘remember that dishonesty and harm violate principles of fairness and integrity’ (general prompt); ‘remember that inaccurate reporting of income is a form of dishonesty’ (specific prompt); and ‘you are not permitted to misreport income under any circumstances’ (prohibitive prompt).

In addition, we increased the number of samples per model from four to five to ensure more robust median estimates.

Study approvals

We confirm that all studies complied with all relevant ethical guidelines. The Ethics Committee of the Max Planck Institute for Human Development approved all studies. Informed consent was obtained from all human research participants in these studies.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.