Artificial intelligence models are starting to succeed in science. In the past two years, they have demonstrated that they can analyse data, design experiments and even come up with new hypotheses. The pace of progress has some researchers convinced that artificial intelligence (AI) could compete with science’s greatest minds in the next few decades.

In 2016, Hiroaki Kitano, a biologist and chief executive at Sony AI, challenged researchers to accomplish just that: to develop an AI system so advanced that it could make a discovery worthy of a Nobel prize. Calling it the Nobel Turing Challenge, Kitano presented the endeavour as the grand challenge for AI in science¹. A machine wins if it can achieve a discovery on a par with top-level human research.

That’s not something current models can do. But by 2050, the Nobel Turing Challenge envisions an AI system that, without human intervention, combines the skills of hypothesis generation, experimental planning and data analysis to make a breakthrough worthy of a Nobel prize.

It might not even take until 2050. Ross King, a chemical-engineering researcher at the University of Cambridge, UK, and an organizer of the challenge, thinks such an ‘AI scientist’ might rise to laureate status even sooner. “I think it’s almost certain that AI systems will get good enough to win Nobel prizes,” he says. “The question is if it will take 50 years or 10.”

But many researchers don’t see how current AI systems, which are trained to generate strings of words and ideas on the basis of humankind’s existing pool of knowledge, could contribute fresh insights. Accomplishing such a feat might demand drastic changes in how researchers develop AI and what AI funding goes towards. “If tomorrow, you saw a government programme invest a billion dollars in fundamental research, I think it would advance much faster,” says Yolanda Gil, an AI researcher at the University of Southern California in Los Angeles.

Others warn that there are looming risks to introducing AI into the research pipeline².

Prize-worthy discoveries

The Nobel prizes were created to honour those who “have conferred the greatest benefit” to humankind, as their namesake, Alfred Nobel, wrote in his will. For the science prizes, Bengt Nordén, a chemist and former chair of the Nobel Committee for Chemistry, considers three criteria: a Nobel discovery must be useful, be rich with impact and open a door to further scientific understanding, he says.

Although only living people, organizations and institutions are currently eligible for the prizes, AI has had previous encounters with the Nobel committee. In 2024, the Nobel Prize in Physics went to machine-learning pioneers who laid the groundwork for artificial neural networks. That same year, half of the chemistry prize recognized the researchers behind AlphaFold, an AI system from Google DeepMind in London that predicts the 3D structures of proteins from their amino-acid sequence. But these awards were for the scientific strides behind AI systems — not for ones made by AI.

For an AI scientist to claim its own discovery, the research would need to be performed “fully or highly autonomously”, according to the Nobel Turing Challenge. The AI scientist would need to oversee the scientific process from beginning to end, deciding on questions to answer, experiments to run and data to analyse, according to Gil.

Demis Hassabis (left) and John Jumper (middle) won a Nobel prize for the AI model AlphaFold. Credit: Jonathan Nackstrand/AFP via Getty

Gil says that she has already seen AI tools assisting scientists in almost every step of the discovery process, which “makes the field very exciting”. Researchers have demonstrated that AI can help to decode the speech of animals, hypothesize on the origins of life in the Universe and predict when spiralling stars might collide. It can forecast lethal dust storms and help to optimize the assembly of future quantum computers.

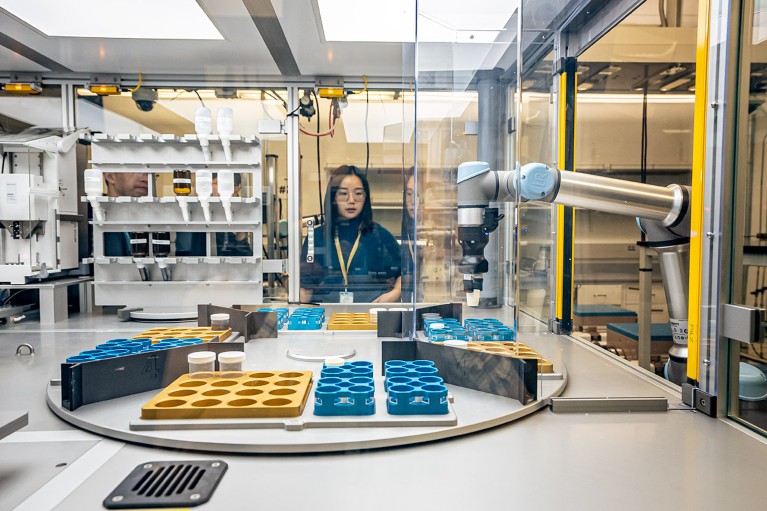

AI is also beginning to perform experiments by itself. Gabe Gomes, a chemist at Carnegie Mellon University in Pittsburgh, Pennsylvania, and his colleagues designed a system called Coscientist that relies on large language models (LLMs), the kind behind ChatGPT and similar systems, to plan and execute complex chemical reactions using robotic laboratory equipment³. And an unreleased version of Coscientist can do computational chemistry with remarkable speed, says Gomes.

One of Gomes’s students once complained that the software took half an hour to work out a transition state for a reaction. “The problem took me over a year as a graduate student,” he says.

The Tokyo-based company Sakana AI is using LLMs in an attempt to automate machine-learning research⁴. At the same time, researchers at Google and elsewhere are exploring how chatbots might work in teams to generate scientific ideas.

Most scientists who are using AI turn to it as an assistant or collaborator of sorts, often appointed to specific tasks. This is the first of three waves of AI in science, says Sam Rodriques, chief executive of FutureHouse, a research lab in San Francisco, California, that earlier this year debuted an LLM designed to do chemistry tasks. Like other ‘reasoning models’, that LLM learns to mimic step-wise logical thought, using a trial-and-error process that involves training on correct examples.

The existing models are helpful collaborators that can make predictions on the basis of data, and accelerate otherwise painstaking sorts of computation. But they tend to need a human in the loop during at least one stage.

Next, says Rodriques, AI will get better at developing and evaluating its own hypotheses by searching through literature and analysing data. James Zou, a biomedical data scientist at Stanford University in California, has begun moving into this realm. He and his colleagues recently showed that a system built on LLMs can scour biological data to find insights that researchers miss⁵. For instance, when given a published paper and a data set of RNA sequences associated with it, the system found that certain immune cells in individuals with COVID-19 are more likely to swell up as they die, an idea that hadn’t been explored by the paper’s authors. It’s showing “that the AI agent is beginning to autonomously find new things”, Zou says.

He’s also helping to organize a virtual gathering called Agents4Science later this month, which he describes as the first AI-only scientific conference. All papers will be written and reviewed by AI agents, alongside human collaborators. And the one-day meeting will include invited talks and panel discussions by human speakers on the future of AI-generated research. Zou says he hopes that the meeting will help researchers to assess how capable AI is at doing and reviewing innovative research.

AI helps A-Lab researchers at Lawrence Berkeley National Laboratory to develop materials. Credit: Marilyn Sargent/Berkeley Lab

There are known challenges to such efforts, including the hallucinations that often plague LLMs, Zou says. But he says these issues could be mostly remedied with human feedback.

Rodriques says that the final stage of AI in science, and what FutureHouse is aiming for, is models that can ask their own questions and design and perform their own experiments — no human necessary. He sees this as inevitable, and says that AI could make a discovery worthy of a Nobel “by 2030 at the latest”.

The most promising areas for a breakthrough — by an AI scientist or otherwise — are in materials science or in treating diseases such as Parkinson’s or Alzheimer’s, he says, because these are areas with big open challenges and an unmet need.