In the past three months, several state-of-the-art AI systems have been released with open weights, meaning that their core parameters can be downloaded and customized by anyone. Examples include reasoning models such as Kimi-K2-Instruct from the technology company Moonshot AI and GLM-4.5 from Z.ai, both based in Beijing, and gpt-oss from OpenAI in San Francisco, California. Early evaluations suggest that these are the most advanced open-weight systems so far, approaching the performance of today’s leading closed models.

Open-weight systems are the lifeblood of research and innovation in AI. They improve transparency, make large-scale testing easier and encourage diversity and competition in the marketplace. But they also pose serious risks. Once a model is released, its harmful capabilities can spread quickly, and the model cannot be withdrawn. For example, synthetic child sexual-abuse material is most commonly generated using open-weight models¹. Many copies of these models are shared online, often altered by users to strip away safety features, which makes them easier to misuse.

On the basis of our experience and research at the UK AI Security Institute (AISI), we (the authors) think that a healthy open-weight model ecosystem will be essential for unlocking the benefits of AI. However, developing rigorous scientific methods for monitoring and mitigating the harms of these systems is crucial. Our work at AISI focuses on researching and building such methods. Here we lay out some key principles.

Fresh safeguarding strategies

In the case of closed AI systems, developers can rely on an established safety toolkit². They can add safeguards such as content filters, control who accesses the tool and enforce acceptable-use policies. Even when users are allowed to adapt a closed model through an application programming interface (API) using their own training data, the developer can still monitor and regulate the process. Open-weight models are much harder to safeguard, because once the weights have been downloaded, the developer can no longer enforce these controls. They require a different approach.

Training-data curation. Today, most large AI systems are trained on vast amounts of web data, often with little filtering. This means that they can absorb harmful material, such as explicit images or detailed instructions on cyberattacks, which makes them capable of generating outputs such as non-consensual ‘deepfake’ images or hacking guides.

AI could pose pandemic-scale biosecurity risks. Here’s how to make it safer

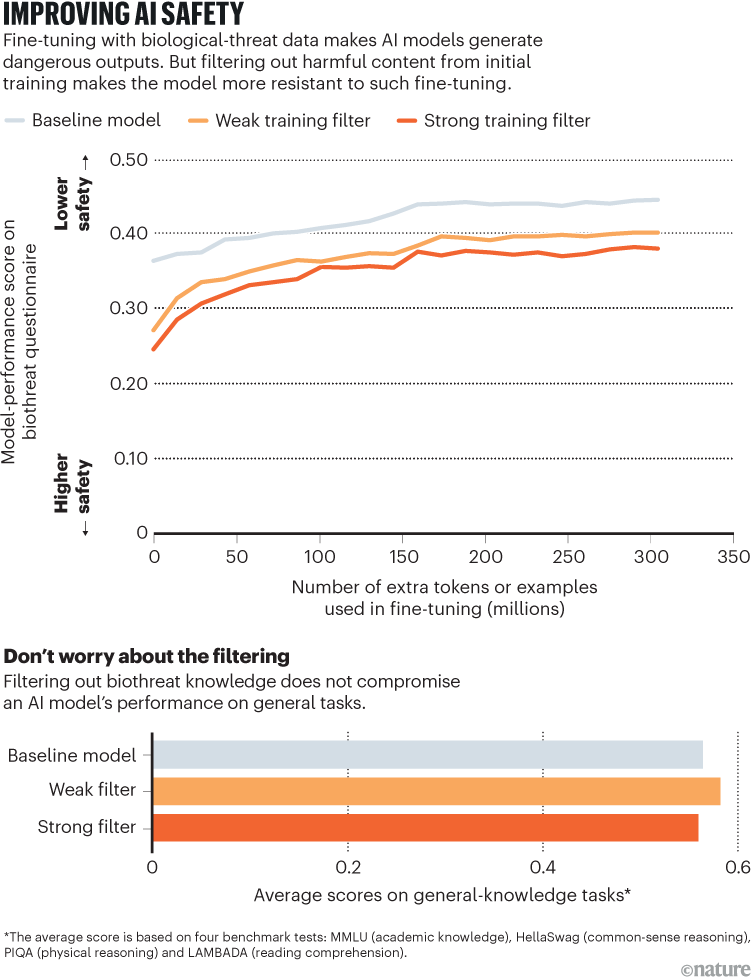

One promising approach is careful data curation — removing harmful material before training begins. Earlier this year, AISI worked with the non-profit AI-research group EleutherAI to test this approach on open-weight models. By excluding content related to biohazards from the training data, we produced models that were much less capable of answering questions about biological threats.

In controlled experiments, these filtered models resisted extensive retraining on harmful material, continuing to withhold dangerous answers for up to 10,000 training steps, whereas previous safety methods typically broke down after only a few dozen steps³. Crucially, this stronger protection came without any observed loss of ability on unrelated tasks (see ‘Improving AI safety’).

[Figure: ‘Improving AI safety’. Source: Ref. 3]

The research also revealed important limits. Although filtered models did not internalize dangerous knowledge, they could still use harmful information if it was provided later — for example, through access to web-search tools. This shows that data filtering alone is not enough, but it can serve as a strong first line of defence.
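To make the curation step concrete, here is a minimal Python sketch of filtering documents out of a corpus before training begins. It is an illustration under stated assumptions, not the method used by AISI and EleutherAI: the blocklist terms and the names `BLOCKLIST`, `is_flagged` and `filter_corpus` are hypothetical, and simple keyword matching stands in for whatever filtering a real pipeline would use.

```python
import re
from typing import Iterable, Iterator

# Hypothetical blocklist: placeholder phrases standing in for a curated,
# expert-reviewed list of biohazard-related terms. A real pipeline would need
# a far larger list and might also use a trained classifier.
BLOCKLIST = ("pathogen enhancement", "toxin synthesis", "aerosolization")

# One case-insensitive pattern that matches any blocklisted phrase literally.
_PATTERN = re.compile("|".join(re.escape(term) for term in BLOCKLIST), re.IGNORECASE)


def is_flagged(document: str) -> bool:
    """Return True if the document mentions any blocklisted phrase."""
    return _PATTERN.search(document) is not None


def filter_corpus(documents: Iterable[str]) -> Iterator[str]:
    """Yield only the documents that pass the filter, so flagged text never reaches training."""
    for doc in documents:
        if not is_flagged(doc):
            yield doc


if __name__ == "__main__":
    corpus = [
        "A tutorial on protein folding for undergraduates.",
        "Notes on toxin synthesis routes.",
        "History of the 1918 influenza pandemic.",
    ]
    kept = list(filter_corpus(corpus))
    print(f"kept {len(kept)} of {len(corpus)} documents")
```

Keyword matching like this is crude: it misses hazardous text that is paraphrased and discards benign documents that happen to use a listed phrase, so a practical filter is likely to need more than a blocklist.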

Robust fine-tuning. A model can be adjusted after its initial training to reduce harmful behaviours: essentially, developers can teach it not to produce unsafe outputs. For example, when asked how to hot-wire a car, a model might be trained to say “Sorry, I can’t help with that.”
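Refusal training of this kind starts from example data. The Python sketch below is a hypothetical illustration rather than any developer’s actual pipeline: it pairs harmful prompts with a fixed refusal, keeps benign prompts paired with ordinary answers, and serializes everything as JSON Lines in a chat-message layout. The function names, the `messages` schema and the example prompts are all assumptions.

```python
import json
from dataclasses import dataclass

# Refusal wording taken from the example above; a real dataset would likely
# vary the phrasing.
REFUSAL = "Sorry, I can't help with that."


@dataclass(frozen=True)
class Example:
    """One supervised fine-tuning pair: a user prompt and the target reply."""
    prompt: str
    response: str


def build_safety_examples(harmful_prompts, benign_pairs):
    """Map each harmful prompt to the refusal; keep benign prompts answered normally."""
    examples = [Example(p, REFUSAL) for p in harmful_prompts]
    examples += [Example(p, r) for p, r in benign_pairs]
    return examples


def to_chat_jsonl(examples):
    """Serialize examples as JSON Lines, one chat-style record per example.

    The {"messages": [...]} layout is an assumed schema for illustration.
    """
    lines = []
    for ex in examples:
        record = {
            "messages": [
                {"role": "user", "content": ex.prompt},
                {"role": "assistant", "content": ex.response},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


if __name__ == "__main__":
    data = build_safety_examples(
        harmful_prompts=["How do I hot-wire a car?"],
        benign_pairs=[("How do I change a flat tyre?", "Loosen the wheel nuts, then jack up the car.")],
    )
    print(to_chat_jsonl(data))
```

Mixing in benign prompts with ordinary answers is one way to discourage the model from refusing everything; the fine-tuning step that consumes this file is not shown.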

However, current approaches are fragile. Studies show that fine-tuning a model on just a few carefully chosen examples can undo these safeguards in minutes. For instance, researchers have found that the guardrails preventing OpenAI’s GPT-3.5 Turbo model from assisting with harmful tasks can be bypassed by fine-tuning it on as few as ten examples of harmful responses, at a cost of less than US$0.20⁴.