In iOS 26, Apple has given app developers access to the on-device model behind Apple Intelligence through its new Foundation Models framework, which they can build directly into their apps.

What does that mean exactly? Some of your favorite apps can now run Apple Intelligence-powered tasks directly, allowing developers to supercharge the user experience with the power of AI.

I’ve looked through the impressive catalog of apps that already take advantage of Apple Intelligence and picked my five favorites to share with you.

All of these apps use Apple’s AI in some way, and the functionality can be accessed on any of the best iPhones built for Apple Intelligence.

Why is this a big deal?

Apple says the new Foundation Models framework gives developers access to the on-device language model at the core of Apple Intelligence, the system that also powers features like Writing Tools, Image Playground, and Genmoji.

The key difference, however, is control. Instead of relying on cloud-based APIs or generic chatbots, apps can now call Apple’s on-device model directly, integrate it with their own features, and even combine it with Apple’s system tools like Vision, Speech, and Translation.

Running the model on-device keeps your data private, avoids the round trip to a server, and opens the door to AI features that feel deeply integrated instead of bolted on.

Of course, the framework is only as capable as Apple Intelligence itself, which still lags behind rivals like ChatGPT and Gemini. That said, the direct system integration is impressive, and the five apps I’m showcasing really highlight its potential.

Some apps have jumped on this immediately, and the results are genuinely impressive. These are not just simple AI summary buttons. These are real use cases that save time, automate boring steps, and make apps feel more human.

1. SmartGym

SmartGym was already one of the best workout planners on iPhone and Apple Watch, but Apple Intelligence elevates it into something that feels closer to a real personal trainer.

Instead of manually browsing templates or fiddling with exercise selections, you can now describe exactly what you want in plain language. “30-minute chest and triceps workout with dumbbells, low impact” is enough for SmartGym to build a complete routine from scratch.

It gets better. SmartGym uses the Foundation Models framework to analyze your training history and make adjustments over time. If you are progressing quickly, it might suggest increasing the weight or the number of sets. If you have been inconsistent, it can scale back the intensity to avoid burnout. Apple’s model can summarize your performance, highlight trends, and feed that insight back into the planner.

It even greets you with personalized suggestions when you open the app. Apple highlighted SmartGym in its original press release on the launch of this framework as an example of how developers can use on-device intelligence to adapt content in real time based on user behavior.

The result is fitness guidance that feels tailored to you in a way that wasn’t possible just a few years ago.

2. Stoic Journal

Journaling apps with AI are nothing new, but most of them simply rewrite your entry or spit out generic prompts. Stoic Journal takes a completely different approach thanks to the Foundation Models framework.

It uses Apple’s on-device intelligence to detect mood, emotional tone, and recurring themes inside your entries. This allows the app to offer context-aware prompts that feel personal and thoughtful.

For example, if you write about feeling anxious or overwhelmed, Stoic might guide you toward clarity, gratitude, or reframing. If you have been logging stress over multiple days, it can highlight that pattern and suggest coping strategies. Because the model runs locally, all of this analysis happens privately on your device without any of your writing being sent to servers.

Stoic also adds natural language search, mood summaries, and gentle notifications that reference your previous entries.

I’ve yet to check this one out, but it’s at the top of my list, especially as the days get shorter and darker in the Northern Hemisphere.

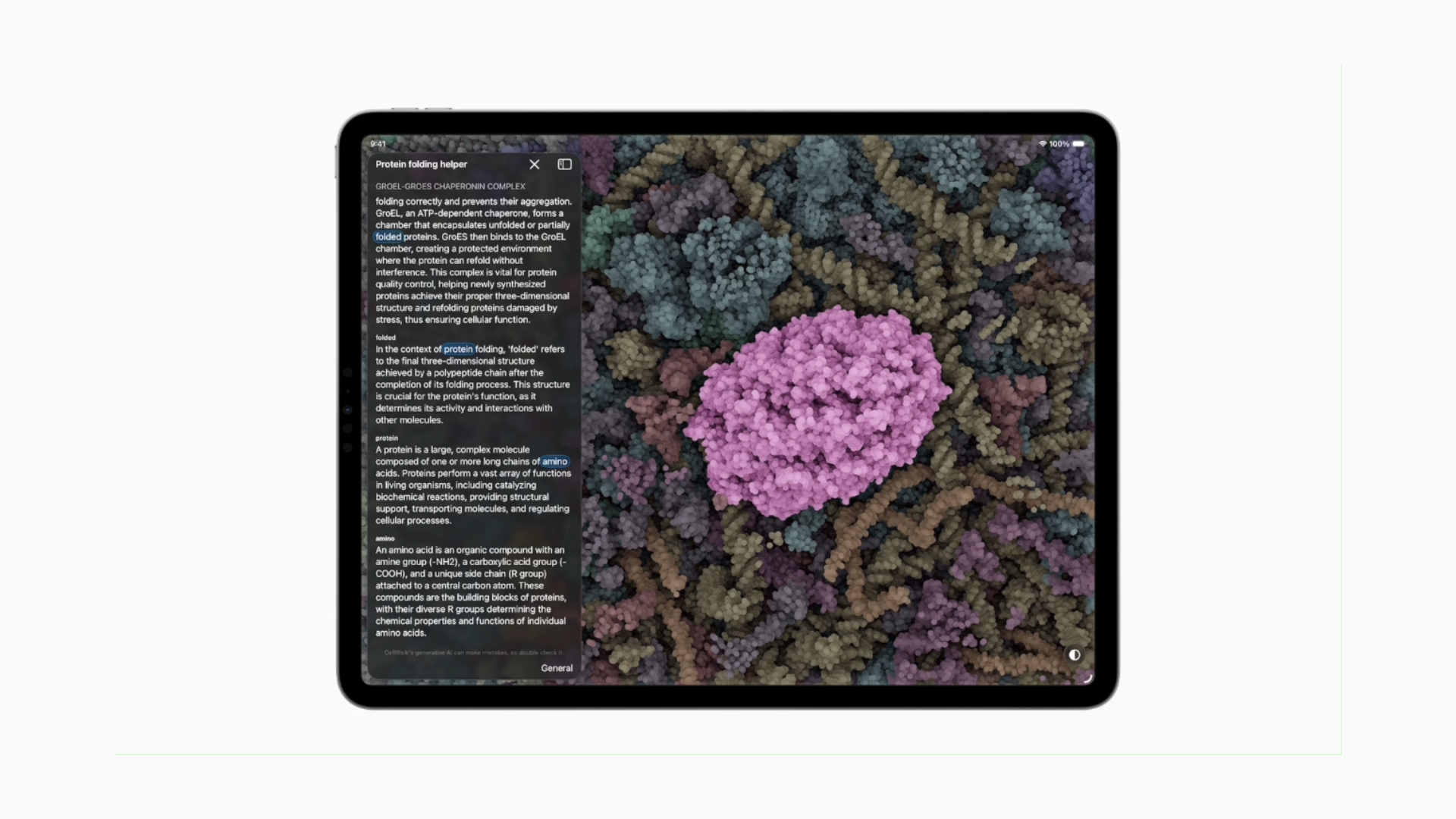

3. CellWalk

CellWalk is a beautifully designed biology and anatomy app that lets you explore detailed 3D models of cells and molecules. Traditionally, if you tapped on a structure you did not understand, you had to read a static label or jump to an external reference.

Now, with Apple Intelligence built in, CellWalk lets you ask natural language questions inside the app and get conversational explanations that match your level of knowledge.

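The Foundation Models framework supports tool calling, which lets the model request information from the app itself while it is composing an answer. In CellWalk’s case, that means responses are grounded in the app’s own verified scientific database, which helps keep explanations accurate and reduces the risk of hallucinations.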
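For the curious, here is a minimal Python sketch of the tool-calling pattern in general. It is not Apple's API (the Foundation Models framework is a Swift framework) and not CellWalk's code. The fact table, the `lookup_structure` tool, and the `stub_model_plan` function that stands in for the model are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-in for an app's curated reference data.
CELL_FACTS = {
    "mitochondrion": "Organelle that produces most of the cell's ATP.",
    "ribosome": "Molecular machine that assembles proteins from amino acids.",
}


def lookup_structure(name: str) -> str:
    """Tool: return the curated entry for a structure, or a clear miss."""
    return CELL_FACTS.get(name.lower(), "No entry found.")


# Registry of tools the app exposes to the model, keyed by name.
TOOLS = {"lookup_structure": lookup_structure}


@dataclass
class ToolCall:
    """A request from the model: which tool to run, and with what argument."""
    tool: str
    argument: str


def stub_model_plan(question: str) -> Optional[ToolCall]:
    """Stand-in for the model's decision step (assumption: a real model
    would decide this itself). Requests a lookup if a known term appears."""
    for term in CELL_FACTS:
        if term in question.lower():
            return ToolCall("lookup_structure", term)
    return None


def answer(question: str) -> str:
    call = stub_model_plan(question)
    if call is None:
        # No tool result to ground on, so decline rather than guess.
        return "I don't have verified information about that."
    # The app, not the model, executes the tool and supplies the fact.
    fact = TOOLS[call.tool](call.argument)
    # A real model would phrase its reply around this fact; the stub just formats it.
    return f"{call.argument.capitalize()}: {fact}"


print(answer("What does a ribosome do?"))
```

The point of the pattern is that the factual content comes from the app's data rather than from whatever the model happens to remember, and a question with no matching entry gets an honest "don't know" instead of a guess.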

The AI also adapts to the way you phrase your question, so CellWalk can help beginners who are curious about science as well as medical students who want deeper analysis.

4. Stuff

I’m always on the lookout for a good productivity app, and Stuff with new Apple Intelligence powers looks like an excellent option to help get things done.

Stuff is a minimalist to-do and list app that is actually pretty powerful. The new Foundation Models integration makes it one of the smartest productivity apps on iPhone. As you type or paste information, Apple Intelligence automatically extracts tasks, dates, tags, and even priorities. Type “Lunch with Emma next Tuesday at 1 pm” and Stuff instantly turns it into a scheduled task, with no need to set the date or time yourself.
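To make "extracting a task" concrete, here is a rough Python sketch of the kind of structured record that free text gets turned into. It is not how Stuff or Apple's model works: I have swapped in a small regular-expression parser as a stand-in for the language model, and the `Task` shape, the `extract_task` function, and the rule that "next Tuesday" means the first Tuesday strictly after today are all my own assumptions.

```python
import re
from dataclasses import dataclass
from datetime import date, time, timedelta
from typing import Optional

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]


@dataclass
class Task:
    """Hypothetical structured result: what is due, on which day, at what time."""
    title: str
    due: Optional[date]
    at: Optional[time]


def extract_task(text: str, today: date) -> Task:
    """Rule-based stand-in for model-driven extraction."""
    due = None
    at = None
    title = text

    day = re.search(r"\bnext (" + "|".join(WEEKDAYS) + r")\b", text, re.IGNORECASE)
    if day:
        target = WEEKDAYS.index(day.group(1).lower())
        # Assumption: "next <weekday>" is the first such day strictly after today.
        delta = (target - today.weekday() - 1) % 7 + 1
        due = today + timedelta(days=delta)
        title = title.replace(day.group(0), "")

    clock = re.search(r"\bat (\d{1,2})(?::(\d{2}))? ?(am|pm)\b", text, re.IGNORECASE)
    if clock:
        hour = int(clock.group(1)) % 12  # maps 12 am to 0 and 12 pm to 12 below
        if clock.group(3).lower() == "pm":
            hour += 12
        at = time(hour, int(clock.group(2) or 0))
        title = title.replace(clock.group(0), "")

    # Collapse the whitespace left behind by the removed date and time phrases.
    return Task(" ".join(title.split()), due, at)


task = extract_task("Lunch with Emma next Tuesday at 1 pm", date(2025, 11, 14))
print(task)
```

With "today" fixed to Friday, November 14, 2025, the example produces a task titled "Lunch with Emma" due on November 18, 2025 at 13:00. A language model earns its keep on the phrasings a hand-written parser like this one would miss.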

There are also two new modes powered by AI: Listen Mode and Scan Mode. Listen Mode lets you speak naturally (“Buy groceries, renew passport, remind me to pay rent next week”) and Stuff converts it all into organized tasks. Scan Mode works the same way, but with images, so that you can take a photo of handwritten notes or a whiteboard, and Stuff will turn it into structured lists.

Because the intelligence runs locally, everything happens instantly and privately. The real magic is how seamlessly it works. You do not have to learn commands or open a separate AI tool. Stuff reads your intent and makes order out of chaos.

5. VLLO video editor

Video editing on mobile is often either too basic or overwhelmingly complex. VLLO strikes a great balance, and with Apple Intelligence, it becomes even more powerful. The app now analyzes your video clips and uses Apple’s Vision APIs alongside the Foundation Models to identify key scenes, detect faces, read the mood, and understand pacing.

From there, it can automatically suggest background music that fits each moment, apply dynamic stickers at the right time, and even build a rough edit based on a simple request like “Make a 30-second highlight reel.”

You still have full control, but VLLO gives you a polished starting point in seconds, rather than forcing you to manually cut everything together.

Apple Intelligence is finally showing its true potential

The most exciting thing about these apps is not the AI itself. It is how naturally the intelligence fits into everyday tasks. You do not have to open a separate chatbot or craft careful prompts. The apps just understand more, anticipate more, and do more of the work for you.

Apple’s Foundation Models framework is a crucial piece of the puzzle because it gives developers the same tools Apple uses in its own apps. They can build features that are fast, private, offline-capable, and deeply integrated into their existing design. Instead of bolting AI on top, they can build AI into the core experience.

This is just the beginning. Apple says developers can combine its on-device model with cloud-based Apple Intelligence when more power is needed. That hybrid approach could unlock even more advanced features without sacrificing privacy. As more apps adopt the framework, we are likely to see entire categories transformed.

If you have an iPhone with Apple Intelligence support, these five apps are the perfect way to see what the future of mobile apps looks like. They are smarter, more helpful, and require less effort than ever before. And the next wave is coming fast.