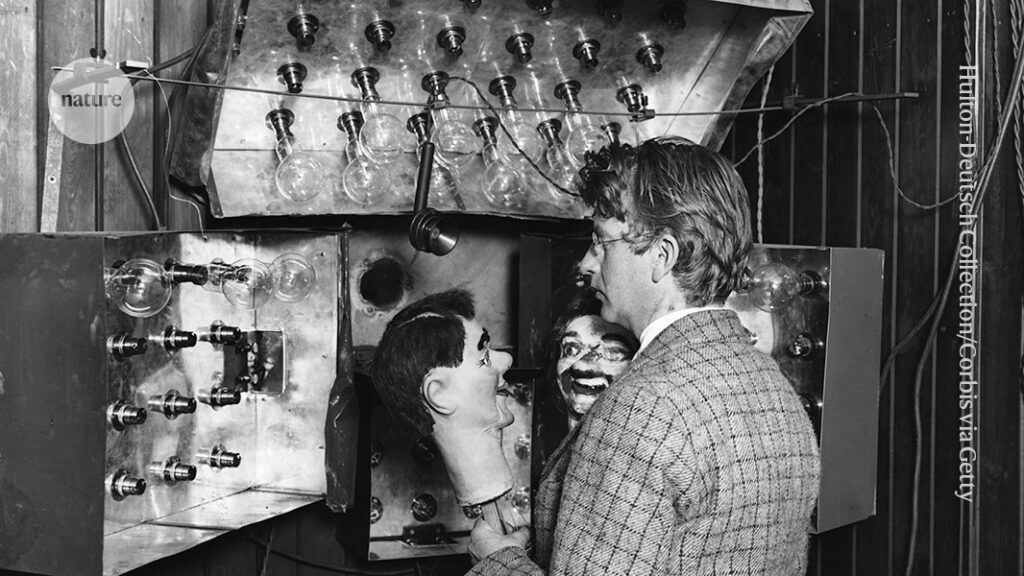

Engineer John Logie Baird used ventriloquist dummies in early experiments with his invention, the television. Credit: Hulton-Deutsch Collection/Corbis via Getty

Long before modern technology, the principle of the common good existed. It was not born with society itself, but later, with an awareness that if it were neglected, a society might collapse.

Principles that promote social cohesion and well-being are found in most cultures. Early examples include the millennia-old Code of Hammurabi, which punished certain liars with a humiliating haircut[1], and ancient Biblical law, which required landowners to leave part of their harvest for orphans, widows, foreigners and poor people.


The real difficulty has been recognizing the boundaries that humans tend to draw when applying such codes. Do we include everyone, or only our own species, culture, country or family? That ambiguity is where the problems begin.

Lack of commitment to the common good is evident in many of today’s challenges — conflict, inequality, humanitarian crises and environmental degradation. And now, it clearly extends beyond the physical world, to the digital universe, in which people spend an ever-growing portion of their lives.

It’s a good time to take stock, 100 years after inventor John Logie Baird successfully transmitted human faces through television, paving the way for today’s countless streams of online content, shaped by algorithms and artificial intelligence (AI).

Are we, as a society, ensuring that communication technologies preserve the common good, or at least do not harm it? I think we are not, but we should be. Here, I set out a manifesto calling for algorithms to be designed and used with the common good in mind.

New media, old problem

In the twentieth century, television stood out as one of the main technologies shaping people's perception of reality. People nowadays associate the word 'television' with the device, but when the Russian scientist Constantin Perskyi first used the term in 1900, he was naming a broader idea: the transmission of images at a distance[2].

Building on that concept, the world of images that now surrounds us — from streaming to social media — can be considered just one more stage in the evolution of television. Technological progress is clear, but social progress has stagnated.

A man's face transmitted in a public demonstration of television in 1926. Credit: Ann Ronan Pictures/Print Collector/Getty

In the early days of television, despite the many private interests surrounding it, there were concrete efforts, in several regions at least, to steer it towards the common good. Broadcasts were guided by principles such as public interest and public service.

Now, however, these pursuits are increasingly being held hostage to algorithms, AI and a handful of private companies (legitimately driven by self-interest and profit). In many ways, this is far from what people experienced in the past century. Yet the pursuit of the common good must remain. After all — and this is not mere rhetoric — our future depends on it.

Fortunately, we have acquired a century of learning.


The habit of seeing images from afar is not new — it has been part of humanity since the first expressions of imagination and storytelling. Fireside stories and artworks in ancient times, for example, reveal not only the earliest seeds of television — in images conjured by the imagination — but also their dual potential. They either served the public by reinforcing social values and creating shared references, or they neglected that role in favour of private interests, by fostering exclusion or spreading misinformation.

Television, and what followed, merely technologized this practice. On 2 October 1925, Baird reported a symbolic moment. He transmitted, in greyscale, the recognizable image of a face — first, a dummy's head, and then the face of a young man called William Taynton. This was the first time that such a feat had been achieved[3].

From 1925 to 1960, television technology consolidated and acquired a social dimension. As more people used it, concerns arose about its role. Two models emerged, which shaped the evolution of television around the world, and drew on principles already established for radio, such as the BBC Royal Charter and the US Radio Act of 1927.

The public-service model — focused on citizens — aims to always promote social cohesion and well-being. This model was favoured in the United Kingdom, and still drives the ethos of the BBC (British Broadcasting Corporation).

Crowds gathered to watch the Apollo 11 Moon landing in 1969 on large television screens. Credit: CBS Photo Archive/Getty

The commercial model — focused on profit — aims to pursue gain without compromising those values. The National Broadcasting Company (NBC) and the Columbia Broadcasting System (CBS) pioneered this model in the United States. Even under the commercial model, working for the common good cannot be ignored: because these companies operate within society, they are also expected to act in the public interest.

The lesson is this: it is up to society to ensure, through regulation, not only that the main players shaping our digital landscape do no harm, but also that they ‘pay back’ for occupying social space, through concrete actions in favour of the common good, at a local or global scale.

From 1960 to 1990, the television set became one of the most influential objects in the world. A common idea of global reality began to take hold through mass audiences that watched global events, such as the 1969 Apollo 11 Moon landing and news reports from the Vietnam War. Yet, as the United Nations cultural organization UNESCO warned in a 1980 report[4], the vision people got was sometimes incomplete, filtered by the producing countries. Although controversial then, from today's perspective, this criticism seems less like calling out censorship and more like a plea for fairness.


A further lesson can be drawn: a freer, more globally connected and technologically dependent world must not seek to homogenize everything and everyone, but to ensure that all differences are seen, respected and heard.

From 1990 to 2010, the generalist logic of television, in which large mixed audiences receive the same programming, was increasingly replaced by diverse and specialized interests in production and consumption — a trend that was tremendously amplified by the popularization of the Internet. It was the start of the transition to our current world, which is awash with information. Yet, as UNESCO also noted in 2005[5], one challenge was to ensure that all this information was not only accessible and respectful of freedom of expression, but also transformed into knowledge that benefited people and societies.

Today, this remains a crucial challenge. Free access to the digital ecosystem and all that it offers is not enough. It is equally essential to ensure that its use serves the good of both the individual and the collective.

From 2010 to 2025, users of smartphones and people with Internet access have increasingly shaped the media. To enhance that experience, other protagonists entered the scene, notably social-media algorithms and AI tools. Ideas, generality and predictability gradually gave way to emotion, personalization and randomness. The sense of community shifted: it no longer depends so much on physical space, but on shared interests, habits and online content.

The digital world is no longer separate from everyday life — it is officially part of it, with access required for schools, public services, banking and work. That changes everything. As UNESCO reminded us in 2023, respect for human rights must be the fuel of the digital world, not left at the doorstep[6].

The concern of a century ago is not so different from now: to give social and democratic meaning to the images that surround us. In the past, those images were shaped by human hands. Today, non-human agents have entered the scene, filtering, organizing and directing much of what we see, read and hear.


At this stage, the most important question might no longer be: where is technological progress heading? Rather, after all this technological advancement, where does social and democratic progress stand? Is the idea of public service still included in social media and AI today?